Hello Neural Network Enthusiasts! In this post we will learn about the perceptron model in neural networks: what it actually is and how it is used in machine learning. Stay tuned and read this article till the end to get a complete picture of it.

The perceptron model is one of the simplest and most fundamental building blocks in the landscape of neural networks. It was invented by Frank Rosenblatt in 1958. The perceptron forms the basis for the more complex models used in machine learning and artificial intelligence today. It has also proved to be a well-defined algorithm that is widely used to solve certain classification problems. So let's dive in and understand what exactly the perceptron is and how it works.


What is Perceptron Model?

A perceptron is an artificial neuron used in machine learning. It is designed to emulate the way a biological neuron works: it takes multiple inputs and produces a single output. It is used specifically for binary classification tasks, where it decides whether an input belongs to one class or the other. The perceptron is based on a type of artificial neuron known as a threshold logic unit (TLU).

Types of Perceptron Model

Single Layer Perceptron Model: This type of perceptron model can only learn linearly separable patterns. It is practical for tasks where the data can be separated into different categories by a straight line.

Multi Layer Perceptron Model: This type of perceptron model consists of two or more layers, which makes it capable of handling more complex patterns within the provided data.
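To make the difference concrete, here is a minimal Python sketch of a two-layer perceptron network that computes XOR (output 1 when exactly one input is 1), a classic pattern that no single straight line can separate. The weights and biases are picked by hand for illustration rather than learned, the function names `step`, `perceptron`, and `xor_mlp` are hypothetical, and we assume the step function outputs 0 when its input is exactly 0.

```python
def step(z: float) -> int:
    """Heaviside-style step: 1 if z > 0, else 0 (assumed convention at z == 0)."""
    return 1 if z > 0 else 0


def perceptron(inputs: list[float], weights: list[float], bias: float) -> int:
    """One perceptron: weighted sum of the inputs plus the bias, then the step function."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return step(z)


def xor_mlp(x1: int, x2: int) -> int:
    """Two-layer perceptron network for XOR with hand-picked (not learned) weights."""
    # Hidden layer: one unit behaves like OR, the other like AND.
    h_or = perceptron([x1, x2], [1.0, 1.0], -0.5)
    h_and = perceptron([x1, x2], [1.0, 1.0], -1.5)
    # Output layer: "OR but not AND" is exactly XOR.
    return perceptron([h_or, h_and], [1.0, -1.0], -0.5)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", xor_mlp(a, b))
```

The hidden layer turns the inputs into a new representation (OR, AND) in which the XOR pattern becomes linearly separable, which is exactly what a single layer cannot do on its own.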

Basic Components of the Perceptron Model

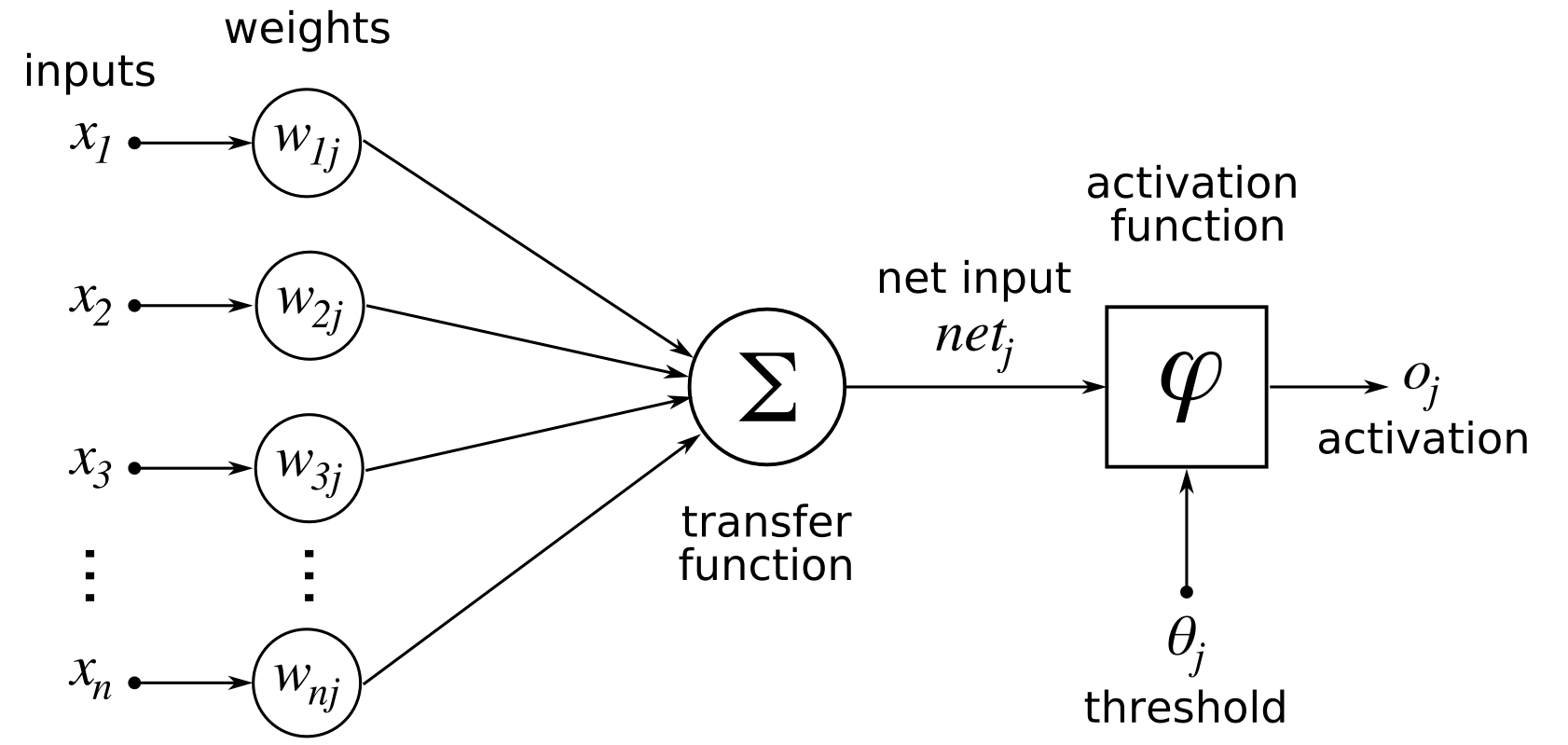

The perceptron, the basic model of an artificial neural network, includes the following components, which work together to process information.

- Input Features: The perceptron takes multiple input features, where each input represents a characteristic of the input data.

- Output: The final output of the perceptron is determined by the result of the activation function.

- Weights: Each input feature is associated with a weight that reflects how important that input is in determining the perceptron's output. During training, these weights are adjusted to learn their optimal values.

- Bias: A bias term is commonly included in the perceptron model. The bias lets the model shift its decision boundary independently of the inputs. It is an extra parameter that is also learned during training.

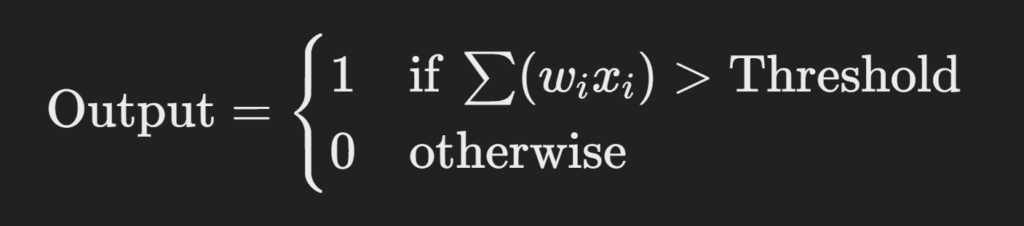

- Activation Function: The weighted sum is then passed to an activation function. The perceptron uses the Heaviside step function, which takes the weighted sum as its input, compares it to a threshold, and returns either 0 or 1.

- Summation Function: The perceptron uses a summation function to compute the weighted sum of its inputs, multiplying each input by its weight and adding up the results.
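Here is a minimal Python sketch that maps each of these components onto code. The class name `Perceptron`, its method names, and the example numbers are hypothetical choices for illustration; we also assume the bias is added inside the summation and that the step function returns 0 when the sum is exactly 0 (conventions at zero vary).

```python
from dataclasses import dataclass


def heaviside(z: float) -> int:
    """Step activation: returns 1 if z > 0, otherwise 0 (convention at z == 0 assumed)."""
    return 1 if z > 0 else 0


@dataclass
class Perceptron:
    weights: list[float]  # one weight per input feature
    bias: float           # extra trainable parameter that shifts the decision boundary

    def summation(self, inputs: list[float]) -> float:
        """Summation function: weighted sum of the inputs plus the bias."""
        return sum(w * x for w, x in zip(self.weights, inputs)) + self.bias

    def output(self, inputs: list[float]) -> int:
        """Output: the activation function applied to the weighted sum."""
        return heaviside(self.summation(inputs))


p = Perceptron(weights=[0.5, -0.25], bias=-0.125)
print(p.summation([1.0, 1.0]))  # 0.5 - 0.25 - 0.125 = 0.125
print(p.output([1.0, 1.0]))     # 1
print(p.output([0.0, 1.0]))     # -0.25 - 0.125 = -0.375, so 0
```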

All of these components work together to allow a perceptron to learn and make predictions. While a single perceptron can perform binary classification, more sophisticated tasks require many perceptrons organized into layers, which together form a neural network.

How Does the Perceptron Model Work in a Neural Network?

A perceptron takes multiple inputs (often binary), applies the corresponding weights to them, sums them up, and then applies an activation function to produce a binary output. Let's walk through the process step by step:

Input & Weights: A perceptron receives multiple inputs, and each input is associated with a weight that determines its importance. These weights are set during the perceptron's learning process.

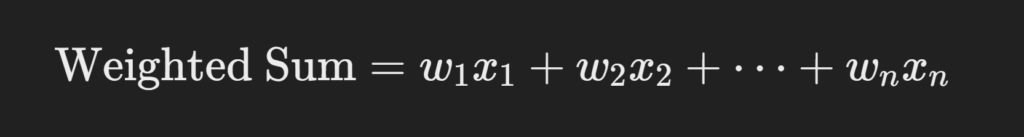

Weighted Sum: The perceptron calculates the weighted sum of its inputs by multiplying each input by its corresponding weight and adding up all of these products:

weighted sum = w1x1 + w2x2 + w3x3 + …

where w1, w2, w3, … are the weights and x1, x2, x3, … are the inputs.

Activation Function: The perceptron applies an activation function to the weighted sum. Typically this is a step function, which outputs 1 if the weighted sum is greater than a particular threshold and 0 otherwise.
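The two steps above can be sketched in a few lines of Python. The input values, weights, and threshold are made-up numbers for illustration, and the function names `weighted_sum` and `step_with_threshold` are hypothetical. The last lines show the standard equivalence between a threshold and a bias: comparing the sum against a threshold θ is the same as adding a bias of −θ and comparing against 0.

```python
def weighted_sum(inputs: list[float], weights: list[float]) -> float:
    """Multiply each input by its weight and add up the products: w1*x1 + w2*x2 + ..."""
    return sum(w * x for w, x in zip(weights, inputs))


def step_with_threshold(total: float, threshold: float) -> int:
    """Output 1 if the weighted sum is greater than the threshold, otherwise 0."""
    return 1 if total > threshold else 0


inputs = [1.0, 0.0, 1.0]      # x1, x2, x3
weights = [0.5, -0.25, 0.75]  # w1, w2, w3
threshold = 1.0               # hypothetical threshold value

total = weighted_sum(inputs, weights)  # 0.5*1 + (-0.25)*0 + 0.75*1 = 1.25
print(total, step_with_threshold(total, threshold))  # 1.25 1

# Moving the threshold to the other side gives the bias form: bias = -threshold,
# and the output is 1 exactly when total + bias > 0.
bias = -threshold
print(1 if total + bias > 0 else 0)  # 1
```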

Now that you have an idea of how a perceptron works, let's see how to train a perceptron model step by step:

Training a Perceptron Model

Training a perceptron model is all about adjusting the weights based on the errors in its output. This process is known as the perceptron learning rule. Let's discuss its steps:

Initializing Weights: Start with random weights, or choose initial values of your own.

Calculating the Output: For every input, calculate the output using the current weights.

Updating the Weights: If the output is incorrect, update the weights to reduce the error using the following rule:

wi = wi + Δwi,

where

Δwi = η (y − ŷ) xi

Here,

- η is the learning rate,

- y is the actual output,

- ŷ is the predicted output, and

- xi is the i-th input.
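As a small worked example of this rule, here is a Python sketch of a single update. The function name `update_weights` and the numbers are hypothetical. Notice that when the prediction is correct, y − ŷ = 0 and the weights do not change; when the target is 1 but the prediction is 0, each weight moves by η·xi.

```python
def update_weights(weights: list[float], inputs: list[float],
                   y: int, y_hat: int, eta: float) -> list[float]:
    """Apply the perceptron rule w_i <- w_i + eta * (y - y_hat) * x_i to every weight."""
    error = y - y_hat  # 0 when the prediction is correct, so nothing changes
    return [w + eta * error * x for w, x in zip(weights, inputs)]


# Hypothetical example: the target is 1 but the perceptron predicted 0.
weights = [0.0, 0.5]
inputs = [1.0, 1.0]
print(update_weights(weights, inputs, y=1, y_hat=0, eta=0.25))  # [0.25, 0.75]
# A correct prediction (y == y_hat) leaves the weights unchanged.
print(update_weights(weights, inputs, y=1, y_hat=1, eta=0.25))  # [0.0, 0.5]
```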

This process is repeated until the output error is reduced to an acceptable level; for linearly separable data, the perceptron eventually classifies every training example correctly.

How Can It Be Implemented in Code?

To build a single layer perceptron model in code, follow these steps:

- Set the learning rate and initialize the weights. Here we treat the bias as an extra weight attached to a constant input of +1.

- Define the linear layer, which computes the weighted sum of the inputs plus the bias.

- Once the linear layer is defined, define an activation function. In this case we use the Heaviside step function.

- Next, define the prediction and loss functions.

- Then define the training step, in which the weights and bias are updated.

- Finally, define the fitting step, which repeats training over the data for a number of epochs.
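The steps above can be sketched as a small Python class. This is a minimal, dependency-free illustration, not a library API: the class name `SingleLayerPerceptron`, its method names, the 0/1 loss, zero-initialized weights, and the stopping rule in `fit` are all our own assumptions. The usage example trains it on the logical AND function, which is linearly separable.

```python
class SingleLayerPerceptron:
    """Minimal single-layer perceptron following the steps above (a sketch, not a library API)."""

    def __init__(self, n_inputs: int, learning_rate: float = 0.1) -> None:
        self.learning_rate = learning_rate
        # Bias handled as an extra weight on a constant +1 input, so n_inputs + 1 weights.
        self.weights = [0.0] * (n_inputs + 1)

    def linear(self, x: list[float]) -> float:
        """Linear layer: weighted sum of the inputs plus the bias weight times +1."""
        return sum(w * xi for w, xi in zip(self.weights, x + [1.0]))

    @staticmethod
    def activation(z: float) -> int:
        """Heaviside step function (outputs 0 at z == 0 by our assumed convention)."""
        return 1 if z > 0 else 0

    def predict(self, x: list[float]) -> int:
        return self.activation(self.linear(x))

    @staticmethod
    def loss(y: int, y_hat: int) -> int:
        """0/1 loss: 1 for a misclassified example, 0 otherwise."""
        return int(y != y_hat)

    def train_step(self, x: list[float], y: int) -> int:
        """Update weights and bias with the perceptron rule; return this example's loss."""
        y_hat = self.predict(x)
        error = y - y_hat
        self.weights = [w + self.learning_rate * error * xi
                        for w, xi in zip(self.weights, x + [1.0])]
        return self.loss(y, y_hat)

    def fit(self, X: list[list[float]], Y: list[int], epochs: int = 20) -> int:
        """Repeat training passes until an epoch has no errors (or epochs run out)."""
        for epoch in range(epochs):
            errors = sum(self.train_step(x, y) for x, y in zip(X, Y))
            if errors == 0:
                return epoch + 1
        return epochs


# Usage: learn the logical AND function, which is linearly separable.
X = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
Y = [0, 0, 0, 1]
model = SingleLayerPerceptron(n_inputs=2, learning_rate=0.5)
model.fit(X, Y)
print([model.predict(x) for x in X])  # [0, 0, 0, 1]
```

Folding the bias into the weight vector (the "+1 input" trick) means the same update rule trains both weights and bias, so no separate bias update is needed.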

Applications & Limitations of Perceptron Model

Applications of Perceptron Model:

The basic perceptron model is limited to linearly separable problems, but it forms the foundation for more complex neural network models. Some basic applications include:

- Binary Classification

- Pattern Recognition

- Image Recognition

- Decision Making

- Natural Language Processing

Limitations of Perceptron Model:

The perceptron has its own limitations: it can only handle linearly separable problems, so it struggles with complex, nonlinear data. However, these limitations paved the way for multi-layer perceptrons and more complex neural network architectures.
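A quick way to see this limitation is to train the same single perceptron on two tiny datasets: AND, which is linearly separable, and XOR (output 1 when exactly one input is 1), which is not. The Python sketch below is illustrative; the function names and the learning rate and epoch values are hypothetical. No matter how long it trains, a single perceptron must misclassify at least one XOR point, because no straight line separates XOR's two classes.

```python
def train_perceptron(X: list[list[float]], Y: list[int],
                     learning_rate: float = 0.5, epochs: int = 100) -> list[float]:
    """Train one perceptron with the perceptron rule; bias is an extra weight on a +1 input."""
    w = [0.0] * (len(X[0]) + 1)
    for _ in range(epochs):
        for x, y in zip(X, Y):
            xb = x + [1.0]
            y_hat = 1 if sum(wi * xi for wi, xi in zip(w, xb)) > 0 else 0
            w = [wi + learning_rate * (y - y_hat) * xi for wi, xi in zip(w, xb)]
    return w


def count_errors(w: list[float], X: list[list[float]], Y: list[int]) -> int:
    """Count how many examples the trained weights misclassify."""
    return sum((1 if sum(wi * xi for wi, xi in zip(w, x + [1.0])) > 0 else 0) != y
               for x, y in zip(X, Y))


X = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
w_and = train_perceptron(X, [0, 0, 0, 1])
w_xor = train_perceptron(X, [0, 1, 1, 0])
print("AND errors:", count_errors(w_and, X, [0, 0, 0, 1]))  # AND errors: 0
print("XOR errors:", count_errors(w_xor, X, [0, 1, 1, 0]))  # always at least 1
```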

Conclusion

The perceptron is a major subject in the field of neural networks and machine learning. Learning how a perceptron works and how it is used lays the foundation for studying more complex concepts and methods in artificial intelligence. Researchers and engineers build the complex neural networks behind today's AI technology on the perceptron's foundational principles.

We hope that after reading this article till the end, you now have a clear idea of the perceptron model. If you still have any questions about it, feel free to reach out to us or leave a comment!

Frequently Asked Questions

1. What is a Perceptron in Neural Networks?

The perceptron model is the basic type of artificial neural network used for binary classification tasks. It consists of a single layer of output nodes connected to an input layer, where the input data is processed and classified based on a weighted sum of the inputs.

2. How does the Perceptron Model work?

The perceptron model works by taking inputs, multiplying them by their associated weights, and summing these weighted inputs. It then applies an activation function to the sum to decide the output.

3. What are the main components of a Perceptron?

Inputs, Bias, Weights and an activation function are the main components of the perceptron model.

4. What is the activation function in a Perceptron?

The activation function in a perceptron is a step function: if the weighted sum of the inputs is greater than a certain threshold, the function outputs 1; otherwise it outputs 0.

5. Can a Perceptron solve non-linear problems?

No, a single-layer Perceptron can only solve linearly separable problems.

6. What is the learning rule in a Perceptron?

The perceptron learning rule is the algorithm used to adjust the weights and bias during training.

7. What is the difference between a Perceptron and a Neuron in more complex neural networks?

A perceptron is a single-layer neuron model used for binary classification, while neurons in more complex networks, such as multi-layer perceptrons (MLPs), are organized in layers and can use non-linear activation functions.

8. What are some practical applications of the Perceptron Model?

Spam detection, image classification, sentiment analysis, and many more.

9. How is the Perceptron related to modern deep learning models?

The perceptron is the foundational building block of more complex neural networks. Modern deep learning models build on it by using multiple layers, non-linear activation functions, and advanced optimization techniques.